« Data.gov Hey, where’s the RAW data? | Automated data profiling and reporting- Data quality behavioral modification? »

Reduce Business Intelligence cost through better data migration

Managing Business Intelligence cost is not an easy task. But poorly or inconsistently structured data can make the task even harder. Unfortunately, a lazy data migration project can generate all sorts of headaches that will cause your Business Intelligence cost to explode. Of course, bad data quality also has many other costs and risks associated with it in its own right, but I’m going to focus in on business intelligence today.

Managing Business Intelligence cost is not an easy task. But poorly or inconsistently structured data can make the task even harder. Unfortunately, a lazy data migration project can generate all sorts of headaches that will cause your Business Intelligence cost to explode. Of course, bad data quality also has many other costs and risks associated with it in its own right, but I’m going to focus in on business intelligence today.

The majority of the development cost in the current business intelligence methodology is often in getting the data out of source systems (Extract), and transforming it to make it consistent across all the various dimensions needed (Transform) and then putting it in a model that is easy to query and analyse (Load). The creation of these ETL jobs is made dramatically harder if the data in the source systems is not consistent.

Change is the challenge

Companies are not static- they grow, diversify, change strategies, reorganize, rename and restructure. They acquire other companies or are acquired. The structure and content of the data their systems often tells you this story, and if the proper work is not done to keep the data consistent with itself and the new situation then this story will be painful and complex.

Remember ten years ago when we acquired company X, but decided not to change their customer codes to our standard, so all the codes had an “X” prefixed so that we wouldn’t have duplicates? Well, those X’s are still there, and all our queries have to deal with multiple code structures.

Remember how we used to have three independent databases, one for each region, then when we went to the new data center and put everything into a single database, we ended up with multiple schemas and all those crazy views rather than consolidating into a single instance?

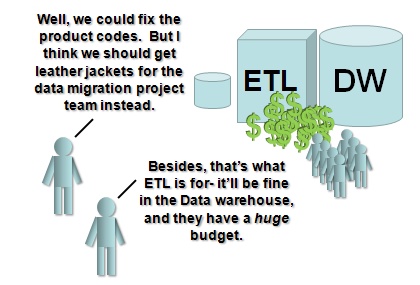

When the data migration project made the decision to reduce the project cost by not addressing data consistency, they simply pushed this cost in the future, most likely turning a one time expense into an ongoing and expanding annual business intelligence cost.

You end up with crazy ETL jobs that parse the same field in different ways depending on the date of the transaction, or on other fields- “If the transaction is before 2002, then the first digit of the product code means X, otherwise it means Y, unless of course its from the western division, who do it differently so then you need to look at field A and use the CASE statement…”

Reduce Business Intelligence cost through data cleanup

If your data is cleaner you’ll reduce business intelligence cost across your entire BI architecture.

- Reduce ETL and report development cost- both initial, and the cost of ongoing maintenance. Every change request will take more time if all the models are complex due to underlying data complexity.

- Reduce hardware costs- complex queries require more processing, and bigger servers to meet that nightly load window

- Reduce time spent reconciling numbers. Complex ETL means that chances are business intelligence reports don’t match up easily with the operational reports from the source systems. People will spend time constantly double checking these discrepancies, and it will undermine confidence in all data.

Fix the problem at the source. Not in the Business Intelligence.

Business intelligence is far too often left to fix all the issues in the source systems- and then becomes the focus of dissatisfaction when costs and delays become unacceptable.

Business intelligence is far too often left to fix all the issues in the source systems- and then becomes the focus of dissatisfaction when costs and delays become unacceptable.

I’ve heard people argue “Thats what ETL is for right? Why are you complaining?”

Assuming that the ETL will fix the sins of the source system is an inefficient and costly strategy.

Everything is a balance, perfection does not exist, but when deciding what to fix and what to leave, don’t let a lazy data migration project saddle you with years of business intelligence costs- when it’s time to bulk load data into the system, make it as right as you can.

« Data.gov Hey, where’s the RAW data? | Automated data profiling and reporting- Data quality behavioral modification? »