« Federal IT dashboard what does $75 Billion in IT spending buy | Datamartist 1.0.5 Available- with data profiling, Excel import and export to database »

Making rapid prototypes for data warehouse ETL jobs

Data warehouses and even data marts can be expensive, complex projects. They are not projects to start lightly, and they are not projects that you want to launch without doing some solid planning.

But there is a way to get a handle on the tricky parts of your data warehouse scope, and to reduce your projects overall cost.

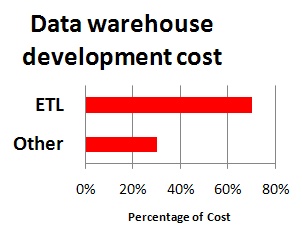

The major cost component of any data warehouse project is the Extract Transform and Load (ETL) development. Obviously every project is slightly different, but in my experience ETL will often make up in the order of 70% of the development cost. One of the drivers of this cost is the relatively high priced ETL development resources required. In the markets where I’ve hired resources, an ETL developer will often demand a 30-40% higher hourly rate than a business intelligence report writer, for example.

The major cost component of any data warehouse project is the Extract Transform and Load (ETL) development. Obviously every project is slightly different, but in my experience ETL will often make up in the order of 70% of the development cost. One of the drivers of this cost is the relatively high priced ETL development resources required. In the markets where I’ve hired resources, an ETL developer will often demand a 30-40% higher hourly rate than a business intelligence report writer, for example.

Making ETL prototypes will give you insights that can reduce cost by shortening the ETL development process and making the optimum use of those highly talented and expensive ETL resources.

What affects the cost and complexity of ETL jobs?

For any given scope, the following will have a large impact on the number and complexity of ETL jobs and therefore their cost.

For any given scope, the following will have a large impact on the number and complexity of ETL jobs and therefore their cost.

- The number of different data sources involved.

- The consistency in terms of master data definitions between systems.

- The level of data quality in the systems.

Ideally, you want to get a good handle on these three things before you hire all the ETL developers, and be confident that you are going to satisfy the users needs before millions of dollars are spent on Extract Transform and Load (ETL) jobs and business intelligence reports.

One part of the preparation needed to do this can be the creation of a proof of concept or mockup of key parts of the data warehouse ETL deliverable.

Now, there are mockups, there are prototypes, and there are “first versions”. The the most effective approach is to create a mockup or prototype that;

- Goes just deep enough into the data to:

- Establish all data sources that will be required

- Gives a high level audit of their master data and data quality

- Provides enough output that:

- End users can be supplied with example reports or cubes to get hands on

- The functional scope can be locked down with confidence on all sides.

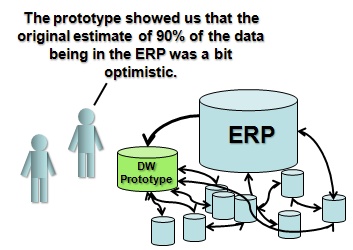

The goal of a data warehouse prototype is to learn about the underlying data, and to be able to try different data transformation techniques and approaches on the real data. The goal is not to make the finished product, nor to deliver actually usable reports to end users, although it may be to generate an example result for users to validate.

An example might be to create a prototype to calculate total sales by segment for a period under a new customer segmentation. This would identify if the segmentation rules that have been suggested actually result in the expected segementation of sales data, and if the fields involved are complete and correctly populated in the source systems.

A prototype should focus on the dimensions and data sources that are expected to be the most difficult, and involve multi-source integration. Don’t spend time prototyping the easy stuff.

When you are making a prototype remember its a one-time development. Manual steps and doing some “data cleaning by hand” are perfectly reasonable- its what you learn from the prototype, not how you learn it that is important. Take a snapshot, or a sample of the various tables and put them in a sandbox environment where you can manipulate them quickly and easily.

The whole point is to move quickly, get lots of feedback from users, and be able to avoid unpleasant discoveries during the actual data warehouse development.

If you find a data quality issue, and it’s a tough one, then just remove those rows and continue on- remember you don’t have to solve all the problems in the prototype- you need to identify them. Be open with your users about what the exercise is about- and that it is a very rough pass, and a mockup.

How much could this impact cost?

If you can identify issues during the prototype then you can solve them before all the ETL development resources are brought onto the project.

If you do not do a prototype, and find a data quality issue that requires some back and forth with the business, every week of delay will probably represent thousands or tens of thousands of dollars, with the project team waiting on the resolution before being able to resume coding the ETL jobs in question.

So in summary, making prototypes will:

- Reduce the risk of scope creep because users have actually seen and “touched” a mockup of the final output.

- Reduce the amount of rework in ETL code because different data transformation approaches can be tested early.

- Reduce the risk of the expensive ETL development phase of the project slipping due to unknown data quality issues.

The right tool for ETL Prototypes.

Often prototypes are built in a combination of Excel, MS Access or other databases. These tools can work, but excel has serious issues handling larger data sets, and database development is often cumbersome- the idea is to make a prototype, not actually build the SQL code. Things like different data types, field formats, column naming rules etc. between different source databases often frustrate attempts to do something quickly.

Obviously another option is the enterprise ETL tools themselves- but the cost, complexity and overhead of these tools again makes them better suited to the production system- not a quick mockup or rapid prototype.

What you need to make an ETL prototype is an easy to use ETL tool that provides the basic type of functionality and graphical user interface of high end ETL tools, but also allows a more flexible treatment of data types, all with the ability to pull data from multiple sources, including more informal sources like Excel spreadsheets.

The Datamartist tool was created to provide exactly such a data scratchpad, ideal for rapid prototyping data transformations. It lets you profile your data and build data transformations using a visual, block and connector interface. But it represents a clear, focused and easy to use ETL tool, without all the feature bloat, cost and server configuration required by many expensive enterprise ETL solutions.

Download the free trial, and see for yourself.

« Federal IT dashboard what does $75 Billion in IT spending buy | Datamartist 1.0.5 Available- with data profiling, Excel import and export to database »

Do you have numbers or references to back up your 70% figure? My management is focused on the features of the analytics server and is giving minimal resources to ETL. I would love to show them industry averages.

Nope- that percentage is from my experience, and experiences reported to me by others. I worked for about 6 years on various projects, and kept budget figures for all of them (always trying to improve the estimation side)- overall the ETL was consistently in that range. I’ve heard others offer the same figure, or concur, but no hard data. Which is a pity, because I was always facing exactly what you described- management was always willing to pay for the flash on the front end, but wanted to know why the data transformation was so expensive.

The problem, of course, with trying to compare from project to project is that they are all different- very complex front ends in situations where there is only one source system, data quality is good and a robust master data process has been in place will change the ratio significantly, for example.