Any discussion of data migration needs to include data quality as a core topic. Migrating data from one set of applications to another is a complex task, particularly when the applications were never designed to interact and share little or no common structure or definitions. The data quality issues that almost always exist in legacy systems make it more complex still.

In part 1, we set the stage for data migration with a lighthearted look at the pitfalls of badly migrated data. In this post, I’m going to talk about data quality and give some perspective on why it’s so important to think about data quality every step of the way during a data migration project.

It’s not just a data migration project. It’s a data quality project feeding into a data migration project.

Because the destination system often has a “stricter” data model, or uses a broader data set to support enhanced features, bad-quality data in the legacy system that is “not causing any problems” is likely to become an issue in the new system.

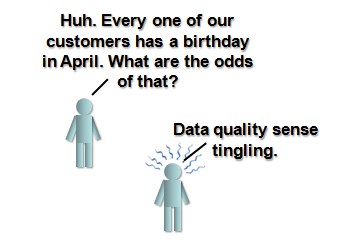

Let’s look at a trivial example. In the existing customer relationship management system, there is a mandatory field for the customer’s birth date. Salespeople are supposed to use it to help maintain a personal relationship. It’s mandatory, but the system doesn’t use it, except to send the salesperson a reminder on the customer’s birthday.

So salespeople have to enter a date; if they don’t, the record gets flagged as missing data.

So what happens? They fill in some random date and ignore all the reminders.

But in the new system, the customer relationship management system sends the customer an email on their birthday, wishing them a happy one and offering them a discount code. The birth date is now directly linked to business transactions with a financial impact.

Suddenly, birth date is a field whose quality has to be addressed.
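To make the birthday example concrete, here is a minimal Python sketch of the kind of check that could surface filler dates before they start triggering discount emails. It assumes records are dictionaries with hypothetical `id` and `birth_date` (ISO `YYYY-MM-DD` string) fields, and the thresholds (plausible age range, how often one date may repeat) are illustrative guesses you would tune for your own customer base.

```python
from collections import Counter
from datetime import date


def suspicious_birth_dates(records, today, min_age=16, max_age=110,
                           min_repeats=3, max_share=0.01):
    """Return {customer_id: reason} for birth dates that look like filler.

    Heuristics (illustrative assumptions, tune them for your own data):
    - the value is missing or not an ISO 8601 date
    - the implied age falls outside [min_age, max_age]
    - the same date is shared by at least min_repeats customers AND by more
      than max_share of all customers, which suggests a default value
    """
    flags = {}
    parsed = {}
    for rec in records:
        raw = rec.get("birth_date")  # hypothetical field name
        try:
            parsed[rec["id"]] = date.fromisoformat(raw)
        except (TypeError, ValueError):
            flags[rec["id"]] = "missing or unparseable"

    counts = Counter(parsed.values())
    share_limit = max_share * len(records)
    for cid, dob in parsed.items():
        # Whole years of age as of `today`.
        age = today.year - dob.year - ((today.month, today.day) < (dob.month, dob.day))
        if not min_age <= age <= max_age:
            flags[cid] = f"implausible age {age}"
        elif counts[dob] >= min_repeats and counts[dob] > share_limit:
            flags[cid] = f"shared by {counts[dob]} customers"
    return flags


customers = [
    {"id": 1, "birth_date": "1985-04-12"},
    {"id": 2, "birth_date": "1900-01-01"},
    {"id": 3, "birth_date": "2000-01-01"},
    {"id": 4, "birth_date": "2000-01-01"},
    {"id": 5, "birth_date": "2000-01-01"},
    {"id": 6, "birth_date": None},
]
flags = suspicious_birth_dates(customers, today=date(2024, 6, 1))
for cid, reason in sorted(flags.items()):
    print(cid, reason)
```

None of these heuristics proves a date is wrong; they only produce a shortlist for someone to review, which is usually the realistic goal of a first pass.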

This is perhaps a silly example, but there are more serious ones. The bottom line is that the data migration project will often be required to improve data quality at the same time. It’s two projects in one: make sure you’ve budgeted for it, or you’re going to have to somehow pull off both for the price of one. Not easy.

It seems obvious, yet remarkably, data migration project budgets are often drawn up on the assumption of near-perfect data.

So the first step in any data migration project, ideally before the project budget is even fixed, is this:

Do a first-pass data quality audit as early as possible.

Know what you have. Profile the data in the legacy systems so that when it comes time to migrate the data, you know where the problem spots are in the key fields.
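A first-pass profile doesn’t need a specialised tool. As a rough illustration, the Python sketch below reports, for each field in an extract, how many values are missing, how many distinct values there are, and which values occur most often. It assumes the legacy data has been exported as a list of flat dictionaries; the field names in the usage example (`customer_id`, `country`, `phone`) are made up.

```python
from collections import Counter


def is_blank(value):
    """Treat None and empty or whitespace-only strings as missing."""
    return value is None or (isinstance(value, str) and not value.strip())


def profile(rows, top_n=3):
    """Per-field completeness, cardinality and most common values.

    `rows` is a list of flat dicts (one per legacy record); values must be
    hashable so they can be counted.
    """
    fields = sorted({key for row in rows for key in row})
    report = {}
    for field in fields:
        values = [row.get(field) for row in rows]  # absent key counts as None
        present = [v for v in values if not is_blank(v)]
        counts = Counter(present)
        missing = len(values) - len(present)
        report[field] = {
            "missing": missing,
            "missing_pct": round(100 * missing / len(rows), 1),
            "distinct": len(counts),
            "top": counts.most_common(top_n),
        }
    return report


rows = [
    {"customer_id": "C1", "country": "DE", "phone": "+49 30 1234"},
    {"customer_id": "C2", "country": "de", "phone": ""},
    {"customer_id": "C3", "country": "DE"},
    {"customer_id": "C4", "country": None, "phone": "n/a"},
]
for field, stats in profile(rows).items():
    print(field, stats)
```

Even on this toy extract, the profile hints at typical problems: `de` alongside `DE` in the country field, and `n/a` counted as a real phone number simply because it isn’t blank.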

What do I mean by first pass? At this early stage, you may not have access to a mature design for the target system (the “to be” application architecture and configuration). That means you may not yet be able to focus on the specific data the new system will require, or on the format and granularity in which it will be required.

Nevertheless, you can be pretty sure that things like order history, customer information and product attributes will be there, so your quality audit of the legacy system is going to pay off.

As more detailed specifications are written and your understanding of both the source and target systems grows, you will need to keep assessing the impact of data quality on your migration efforts.
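One way to keep that assessment going is to turn each target-system requirement into an explicit, testable rule as soon as it is known, and re-run the rules against the legacy extract. The Python sketch below is only an illustration: the `email` and `country` fields and both rules are hypothetical, and the real rules would come from your target system’s specification.

```python
import re
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class Rule:
    name: str                        # human-readable description of the requirement
    field: str                       # hypothetical field name in the legacy extract
    is_valid: Callable[[Any], bool]  # True when the value meets the requirement


def check_rules(rows, rules):
    """Map each rule name to the indices of rows that violate it."""
    violations = {rule.name: [] for rule in rules}
    for i, row in enumerate(rows):
        for rule in rules:
            if not rule.is_valid(row.get(rule.field)):
                violations[rule.name].append(i)
    return violations


# Hypothetical target-system requirements, added as the "to be" design firms up.
EMAIL = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")  # deliberately loose shape check
COUNTRY = re.compile(r"[A-Z]{2}")                 # shape of an ISO 3166 alpha-2 code only

rules = [
    Rule("email present and well-formed", "email",
         lambda v: isinstance(v, str) and EMAIL.fullmatch(v) is not None),
    Rule("country is two upper-case letters", "country",
         lambda v: isinstance(v, str) and COUNTRY.fullmatch(v) is not None),
]

rows = [
    {"email": "ana@example.com", "country": "DE"},
    {"email": "n/a", "country": "de"},
    {"country": "FR"},
]
for name, bad_rows in check_rules(rows, rules).items():
    print(f"{name}: {len(bad_rows)} violation(s) in rows {bad_rows}")
```

Keeping the rules as data rather than hard-coded checks means new requirements can be added as the target design matures without touching the checking loop.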

But you may find that even a data quality audit of the legacy system raises a whole new set of questions. The adventure often starts with a bunch of blank looks when you ask for the legacy system’s data dictionary.

So next up: establishing the metadata model. Because in a migration, one of the first steps in getting somewhere is knowing where you are starting from.

This post is part 2 of a series: part 1 is here, and part 3 is here.