Migrating data is complicated. It’s particularly hard because of course it’s not just a physical move. Data definitions are different from the legacy to the new systems. To get this right you need to manage these data definitions.

Migrating data is complicated. It’s particularly hard because of course it’s not just a physical move. Data definitions are different from the legacy to the new systems. To get this right you need to manage these data definitions.

In this post, I’m going to discuss some things to keep in mind during this process. As with the other posts in this series, I’m not going to be talking about specific tools or getting into super technical discussions.

I’m going to assume that you do not have millions of dollars to spend on the state of the art master data management software and its configuration. Instead, I’m going to present the high level concepts, and focus on some of the change management aspects. How can you focus on what’s important, and avoid having master data derail your data migration project?

This post is number four in a series. If you are a linear type, and want to read all the posts in order, part 1 is here, then two, and three.

There is a reoccurring theme here- just as when I discussed in part two about how a data migration project is also a data quality project because often existing data quality issues must be resolved;

A data migration project is also a master data management project.

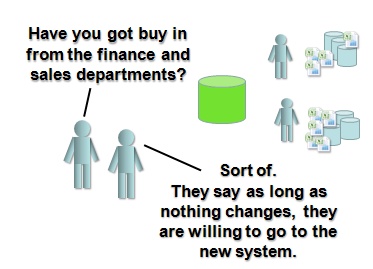

Since often the legacy systems were department based, the concept of formal data definitions, and the processes needed to manage them across functional boundaries simply don’t exist. But if your data migration project is moving master data from multiple legacy systems into a single new ERP application, you are going to need at least a basic set of processes to manage it going forward.

Welcome to change management, cross functional teams and data governance committees.

Data migration means integrating data that used to live apart, and is owned by different groups within the company.

Often, a data migration project is part of a new ERP project to combine a number of legacy systems (Finance, Sales, Manufacturing) into a single integrated application. Because all of the legacy systems were independent, often the data definitions used are very different, and any mappings or reconciliations exist only at a high level if at all.

So what does this mean?

- There will be technical challenges:

- Data will be in strange and wonderful (or at least many) databases from various vendors.

- Data will have different codes and structures

- Data will be stored at different granularity

- Data will be stored in different units

- You may have things like time zones, date formats etc. to deal with

- There will also be actual definition type challenges

- Although something is refered to as “X” in more than one system, the definition may be very different.

- Different functional groups look at data in fundamentally different ways- engineers vs accountants, sales people vs human resources professionals.

- People will be attached professionally, emotionally and politically to their definition and view of things.

The technical challenges are significant- but its the definition challenges that are often the most difficult, because they involve people. These often represent fundamental changes to the processes within a company, and require coordination across many departments, and the political empires that often exist. It takes a firm hand, and good executive support.

Where to start? Well, know some of the potential pitfalls, and define realistic goals clearly.

Just because we call something the same thing doesn’t mean its the same thing.

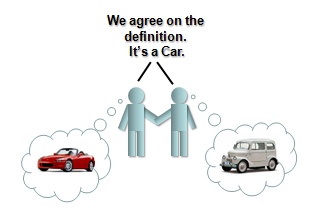

I’ve seen people go into a meeting, have a one hour discussion regarding definitions, come out with a high level list of the metrics and a real belief that they agree on everything. They tell you “Your data migration is going to be straightforward, we’re pretty much on the same page.”

I’ve seen people go into a meeting, have a one hour discussion regarding definitions, come out with a high level list of the metrics and a real belief that they agree on everything. They tell you “Your data migration is going to be straightforward, we’re pretty much on the same page.”

Don’t be fooled by this.

After 30 minutes of digging into the details in the two different systems, you will realize that there are lots of things they don’t agree on.

Why did they think that they agreed? Because they spoke the same words, but meant completely different things. I say, a car has four wheels- you nod. We’re done. But was it a sports car, a sedan, an SUV? I say car- I see my car, you say car you see your car. We nod. Meeting over.

Doesn’t work- you have to dig into the details, and put them on the table.

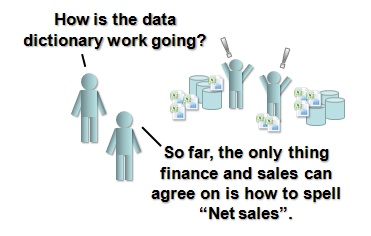

Details are important, and everyone hates them. Find the detail people and get them together.

No one likes the details, but the heart of any master data management work is in those nuts and bolts. Start to dive into the details and most eyes will glaze over. Find the people who care because the details affect their daily job.

What you need to do is make a “data definitions” working group made up of people from multiple departments.

- Get at least one detail oriented team member from each department

- Make sure they are respected and experienced- you need people who know their stuff.

- Make sure it’s clear to all departments that the output of this working group will be the data dictionary used by the new system.

- Add in one or two data people- someone who can query all the systems involved, and can both answer the detailed questions from the group, but also actually validate concepts as they are created by making prototype extractors and transformations

- If you are missing key expertise, bring it in. Even if its your core business, if you think you’re not following industry best practices for your definitions, nomenclature or processes, don’t just “keep doing it the way we do it”.

- take advantage of industry standards for naming and coding, this can sometimes also be a method for avoiding internal battles- we’re going to follow the standard, not have a homemade coding system.

So now you have to give this working group a goal.

The solution is not always to have only one definition in the end.

What? Isn’t that the whole point?

Yes it is when there really is only one definition, but don’t chase after “a single version of the truth” ignoring the reality of what people need, and what metrics are used in the business.

Just because finance and manufacturing calculate “X” in two different ways does not necessarily mean that you have to stop using one of the definitions. Both groups might have a perfectly valid reason to calculate it they way they do. The key is- don’t use just one name for it. Its NOT “X”.

There are two metrics “X1” and “X2” and they have different definitions for different reasons. Put them both in the dictionary, make it clear what the differences are, and when each measure is used.

It’s not a battle. Everyone is on the same team here.

People of all sorts often tend to see things in terms of winning and losing.

People of all sorts often tend to see things in terms of winning and losing.

You might find people thinking in terms such as “Finances definition for “X” has to win. We know how it is supposed to be calculated, and we are going to set it right.” Avoid framing the debate as a competition- present it from the start in an inclusive way;

“We need to list all the metrics and definitions involved, as used by all departments- and if there are duplicates we’ll consolidate, but overall we want to capture them, and ensure they are available. We can all acknowledge that each department has different needs owing to their functional area.”

Create a central repository for the dictionary- make it public from the start.

Now, if you have the million dollar master data management (MDM) softward solution chances are it has this functionality and more. If you don’t, then you should plan to make an internal web site or equivalent means of providing access.

I won’t go into the detail of what an entry in this data dictionary actually consists of, partly because this post is already past my usual length limit, but mostly because there is no hard and fast rule. You can go anywhere from a basic dictionary (that describes what each metric is in english) all the way to a highly sophisticated meta data management tool that interacts directly with your extract transform and load (ETL) logic actually changing how numbers are transformed in your migration jobs. (I’m not sure I’d go that far, even with that mythical million dollar tool.)

The bottom line is, be pragmatic- and be public.

In my experience it has been critical to create a published, work in progress dictionary from the start of the process. The ideal is to have an online database that allows contributors to edit and comment directly. This is important for a number of reasons:

- It makes the process transparent- everyone can see what the definitions are going to be.

- It makes the scope and complexity of the challenge visible. By having all the definitions on line, everyone can see just how many definitions there are, and how much detail there is.

- It tracks versioning and ensures that all the work is captured. Don’t have people with lots of excel files on their local hard drive holding up your progress.

- It lets you publicly assign responsibility for each defintion. DO NOT have a librarian who is reponsible for all definitions – you want to have a process owner from each department assigned to each definition. The ideal number is hard to find and will depend on the company, too few and you don’t have buy in, to many and it’s not managable.

- It allows metrics to be viewed by everyone. Which department has defined and approved the most definitions? Who is holding up the effort? A bit of inter-departmental competitiveness might be useful in this regard.

Define the processes needed to maintain and expand your data dictionary into the future

Finally, as you capture the definitions you need to perform the data migration, the ideal is to define and kick off the processes and required organisational groups that will continue to manage the dictionary after the project go live.

In master data management circles this is often called data stewardship and its critical that you have at least a basic formal process. In the past, when a given chunk of data was only being used by one department, changing how it was defined would be understood by the department, and could be managed somewhat informally. Now, when the same bit of data might be used accross the company since everyone is in the same system, changing that query or how the value is calculated at the request of one department could create all sorts of issues for others. There must be a process in place to validate the change, and to communicate it so that everyone in the system can be aware when the numbers shift beneath them.

In summary, it’s really really hard.

The bottom line is, master data management requires a pragmatic, step by step approach, and is never finished. Manage everyones expectations, and be very careful as to what you promise your data migration project can achieve in this area.

Very large big bang type projects are probably not a good idea, even if you are armed with the latest and greatest tools. As Wikipedia states in its criticism section:

The value and current approaches to MDM have come under criticism due to some parties claiming large costs and low return on investment from major MDM solution providers.

Much like massive data warehouse projects, risks are high, particularly if you don’t have near fanatical support from the very top.

The best approach is most likely to build step by step, clearly defining what has to be defined for the data migration project at hand, and putting in place realistic processes that can be augmented and enhanced over time as your company begins to “grok” why having a data dictionary is important.

It’s a long road, but ignoring master data management in your data migration project adds risks to your projects success, and makes it even harder for future master data efforts to succeed.

Just as a data migration project is an opportunity to affect data quality for the better, it can also be a positive influence on your master data.

Next post- I’m going to wrap up this series with some discussion about how important it is to forge a collaboration between the information technology (IT) department and the various departments within a business, and how IT can play an important role in building bridges through the silos- both data silos and others

Comments

5 responses to “Data migration Part 4- Creating a data dictionary how to tackle master data management”

James – I’ve really enjoyed reading this series, some excellent practical tips included here, great content.

I actually wrote about something similar here: http://bit.ly/8kCNio and I’ve used the same tool (DabbleDB) to create a public Data Dictionary in the past.

I really get your pragmatic point of view here, yes there are expensive metadata re-engineering solutions but quite often you just need something simple that the entire team can use and get started with, I find that DabbleDB is a really simple option as it allows files to be uploaded, images, links to external docs and of course standard rows and attributes of data, if you have any further suggestions please add to this thread so I can update our readers.

Would be great to interview you and do a feature for our readers on Data Migration Pro, will be in touch.

Thanks Dylan!

DabbleDB is a great tool for that sort of dataset I bet (and bonus points for being a Canadian company).

Definately the ability to share and link documents etc. is useful. It strikes me that for the project stage at least this could all be handled by a Wiki of some sort. So many open source options these days, its really possible to imagine setting up something very powerful very quickly.

Thanks for the comment!

Thank you for the post. It is very informative for a begginer like me . Please keep up the good work.

Great Article..It was very informative..I need more details from your side..include some tips..I am working in Cloud Erp Software Company In Chennai

This is a fantastic article. Very informative and very relevant. Thank you!