« Datamartist V1.2 now available | Data.gov Hey, where’s the RAW data? »

Let’s admit it- centralized business intelligence alone just doesn’t work

Posted by James Standen on 3/03/10 • Categorized as Business Intelligence Architecture,Meta Data,Reality Check

One version of the truth. Data warehouses. Centralized business intelligence teams. This has been the best practice for business intelligence for the last two decades.

Users taking the initiative with data has been seen as the enemy of a successful business intelligence program.

This needs to change. In a world of ever increasing data volumes and complexity, faster business processes and more data savvy knowledge workers, a purely centralized solution is doomed to fail.

A consensus is starting to form that the best architecture is one that blends centralized with more distributed and (gasp) free form, user guided methods. In fact, when we look at what actually exists in most enterprises and take into account the unofficial shadow systems, we’re already there, but in two separate camps that aren’t talking.

The amount of freedom to allow ranges from letting the users have at it, to opening up the possibility of departmental data marts, but the buzz out of TDWI clearly indicates a growing acknowledgement that a rigid top down architecture is not tenable.

What are Oracle, IBM, Microsoft, SAP and SAS (who own more than 70% of the business intelligence market) advising as being the right approach?

They advocate big architectures, centralized meta data management, big databases, lots of command and control. They talk about “self serve”- but they mean to existing reports or report interfaces. To be fair, they need to sell the tools they have.

For a refreshing change from this, I very much enjoyed reading Mark Madsen’s keynote at TDWI, “Stop paving the cow path”.

We enjoy reading things that we agree with, and I nodded my way through his slide deck.

In his presentation, Madsen points out that centralization won’t work, because it:

- Creates bottlenecks

- Causes scale problems

- Enforces a single model

Bottlenecks and Scale

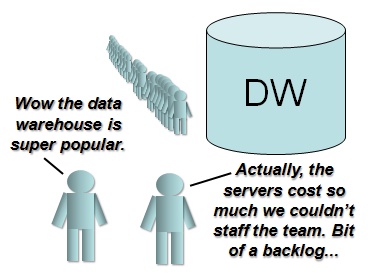

In a centralized system, all requests go into the queue, and the backlog starts piling up.

The size of the department/team that is responsible for making it all work becomes the number one bottleneck.
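To make the bottleneck concrete, here is a minimal sketch in Python. The function name and the numbers are made up for illustration and are not taken from Madsen’s talk. It assumes a fixed number of new requests per week and a fixed number the central team can close per week:

```python
def backlog_over_time(arrivals_per_week, capacity_per_week, weeks):
    """Return the number of open requests at the end of each week.

    Hypothetical model: constant arrivals, constant team capacity.
    """
    backlog = 0
    history = []
    for _ in range(weeks):
        # New requests join the queue; the team closes what it can.
        backlog = max(0, backlog + arrivals_per_week - capacity_per_week)
        history.append(backlog)
    return history


# A team that closes 10 requests a week while 12 arrive falls behind steadily.
print(backlog_over_time(12, 10, 4))  # [2, 4, 6, 8]
```

Even a small, constant shortfall grows without limit in this simple model, which is why the size of the team ends up setting the pace for everyone.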

Are there enough people able to prioritize and analyse the payback on analysis requests? Because in a centralized organisation, the gatekeepers are necessary, and how do they KNOW which requests are the good ones? How does anyone really know?

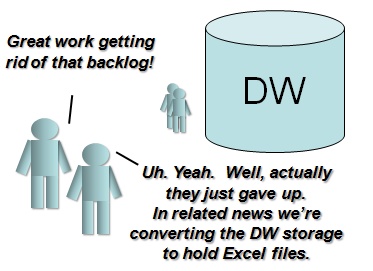

I’m not sure any company can afford to staff a centralized data warehouse team to be able to handle all the requests as they are generated. Prioritization therefore becomes a single point of failure. Get it wrong, and it can be all wrong. In a more distributed structure, decisions are made at multiple points, some good, some bad, but diversity will often bring more innovative and experimental behavior, resulting in new avenues of analysis that an overly static central team might avoid.

For an indication as to how well users think the central team is listening to them, take a look at how many Excel spreadsheets there are around, and how many shadow systems grow like mushrooms throughout the standard enterprise. People think their analysis is important, and even if IT won’t or can’t, they find a way to try to get it done.

In terms of scaling, I can hear the technical types starting to explain how their servers, infrastructure and approach scale- diagrams and MPP theories pulled out with pride. “Centralizing lets it be scalable- what are you talking about?”

Maybe. But there are traps here too- centralized organisations always want to put everything in one database. Having everything in a single repository starts to become the goal- not the cost efficient analysis of the right data. Not centralizing is very scalable- standalone machines can just be added forever.

It may in fact be that data can remain distributed and diverse at certain levels of detail, and more federated approaches can be used, resulting in cheaper hardware and software, and more importantly avoiding a lot of really hard master data management work. Consolidation can sometimes happen at summary levels that make sense from a business point of view- not just blindly following the “one version” mantra.

Enforcing a single model

Isn’t having a single data model good? We’ve been told that it is. In a way, this is the holy grail.

But is there a single, correct, slowly changing model that satisfies everyone in an organisation?

Why do I say slowly changing? Because if there is only one for the entire enterprise, it will change slowly, if at all.

Even if you happen to understand what the right model is (and by model I mean data model, analysis model, process model, any model), and you manage to implement it while it’s still the right model, in a year it’s not going to be the right one. And a centralized, high cost, committed architecture won’t and can’t adapt. You’ll still be paying the mortgage on the data warehouse.

Very large centralized models cannot be comprehensive and up to date, because to be comprehensive they have to be so complex as to be difficult to change, and as a result they quickly become out of date. It’s sort of a Heisenberg uncertainty principle for common meta data repositories.

“Giving people their flying cars”

Madsen of course doesn’t solve the entire problem in his keynote, but he points out some directions that make sense. And his graphic depicting a happy couple blasting off in their very locally controlled flying car sends the message- users can do their analysis without central oversight or interaction. (Although, one would imagine that some sort of air traffic control would be necessary, and the refueling stations for the cars would probably be run centrally- we’re not advocating anarchy here.)

Having built data warehouses, established a data warehouse competency center, and provided business intelligence services for thousands of users, I can testify from first hand experience that centralizing alone is just not going to work. People who worked with me a decade ago will remember the significant amount of time spent creating meta data repositories. Are they still needed? Yes. But they simply can’t do everything. Use them with care, and be wary of your ambition for them.

First, accept the fact that users are not mindless consumers. Learn from the fact that they use Excel constantly, and they don’t just read reports- they build things, adding data, fixing data, re-organizing data. They think. Give them tools that include them as part of the data processing.

Business intelligence cannot be solely a process where formal requirements are gathered, followed by a publishing exercise of delivering the reports on time.

Are there some reports where this is the case? Sure. Monthly management reports and dashboards shouldn’t change every month. The model can work for some amount of the delivered data analysis.

The entire architecture isn’t getting ripped out- but if the new architecture is successful in bringing the pent up demand that is currently being satisfied by shadow systems into the light, then distributed, user centric, user driven business intelligence will become a significant percentage of the total.

But the old way of thinking has to change. Don’t “Crack down on shadow systems”.

Find a way to provide better service, be it self-service, assisted or centralized, that makes the shadow systems simply a less effective way to do it.

The existence of shadow systems, and the extent of them, is the clearest argument that centralized business intelligence alone is simply not up to the task.

Once you have people doing whatever they want in the self directed part of your architecture, DO watch what they are doing- not to control it, but to learn from it. Everyone constantly re-structuring the customer dimension? Obviously it’s time for an update. By watching what users edit and what gaps they fill in, you can find the data quality issues and identify the fuel to put on the self directed fire.
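As a rough sketch of what “watching to learn” could look like, assume the self directed tools can export a simple log of who edited which dimension. That log format, the function name and the threshold are all hypothetical, invented here for illustration. A few lines of Python are then enough to flag the dimensions that many different users keep reworking:

```python
from collections import defaultdict


def heavily_reworked(edit_log, min_users=3):
    """Return dimensions edited by at least `min_users` distinct users.

    `edit_log` is a hypothetical list of (user, dimension) pairs;
    no real tool is assumed to export exactly this format.
    """
    users_by_dimension = defaultdict(set)
    for user, dimension in edit_log:
        users_by_dimension[dimension].add(user)
    return sorted(
        dimension
        for dimension, users in users_by_dimension.items()
        if len(users) >= min_users
    )


# Made-up sample data: three different people reshaped "customer".
log = [
    ("ann", "customer"),
    ("bob", "customer"),
    ("cho", "customer"),
    ("ann", "product"),
    ("ann", "customer"),
]
print(heavily_reworked(log))  # ['customer']
```

Counting distinct users rather than raw edits is a deliberate choice in this sketch: one person tinkering repeatedly is a local need, while many people fixing the same thing points to a gap in the central model.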

Tools like Lyzasoft, our own Datamartist tool, Microsoft’s Power Pivot in Excel 2010 and others are all going to drive power to the users, and introduce a new balanced approach between centralized and local parts of business intelligence architectures. Visualization tools like Tableau will further give people the ability to create powerful, consumable analysis in a self serve mode.

Will there be challenges with data quality, risk management and wasted time doing pointless analysis? Most likely.

Will the information we gather and the payoff from the successful bottom up analysis efforts make it hugely valuable overall? I for one think so.

We need to learn to trust our colleagues with the data, while at the same time managing the reality of data quality and risk of errors that more free form techniques can create.

Companies that include both top down and bottom up capabilities in their architecture will stop wasting time fighting internally, and start to take advantage of all that data.

We see a concept called “Divisional BI” emerging. Not centralized, but not all the way decentralized to the leaves of the organization either. Part of the problem is this “single model” notion as you identified – a whole enterprise is too big for that. There’s not enough shared interest. Think General Electric, which at one point owned a TV broadcaster, a jet aircraft engines division and a finance division – what could they possibly have in common that would warrant a single model? I think this is true even at much smaller organizations, down to maybe $500M revenue companies, most of which were still built by acquisitions.

Divisional BI is the recognition that there’s a tier in the org structure where all the subdivisions have enough common ground that a shared DW/BI infrastructure starts to make sense.

The argument is a bit long, so ….one good blog post deserves another: http://blog.oco-inc.com/business-intelligence/analytics-for-the-business/bid/31217/Divisional-BI-Not-Enterprise-BI